Understanding Adaptive Machine Translation

A deep dive into the mechanics of dynamic, adaptive MT technology, which differs dramatically from static MT systems. It is an agile, highly responsive, and flexible approach to making MT work for professional and enterprise use.

Machine translation has been around for over 70 years and has made steady progress in tackling what many consider to be one of the most difficult challenges in computing and artificial intelligence. We have seen the approach to this challenge change and evolve, and MT has become much more widely used, especially since the advent of neural MT.

The deep learning neural net methods used in Neural MT have led to significant improvements in output quality, especially in terms of improved fluency, and have encouraged much wider use of machine translation in global business-driving applications.

While the momentum of Neural MT is well understood and recognized as a major advance in state-of-the-art (SOTA) machine translation, it is surprising that Adaptive MT has not had a greater impact.

This is especially true in the enterprise and professional translation market, where Adaptive MT can address specific and unique business needs much more effectively than alternatives. This paper explains why, even in the age of large language models, Adaptive MT remains a critical technology for global enterprises and professional users.

To better understand the value of Adaptive MT systems, it is useful to present a contrast to the typical generic static systems that most are familiar with.

The Typical Generic Static MT Engine

Generic and relatively static MT engines (i.e., they do not change how a phrase is translated until the next major update of the engine) are designed to be used by millions of people. The effort to build a generic baseline engine is significant in terms of cost, complexity, and the amount of data required, so it is not done often.

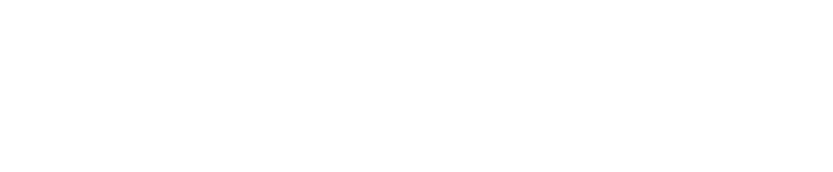

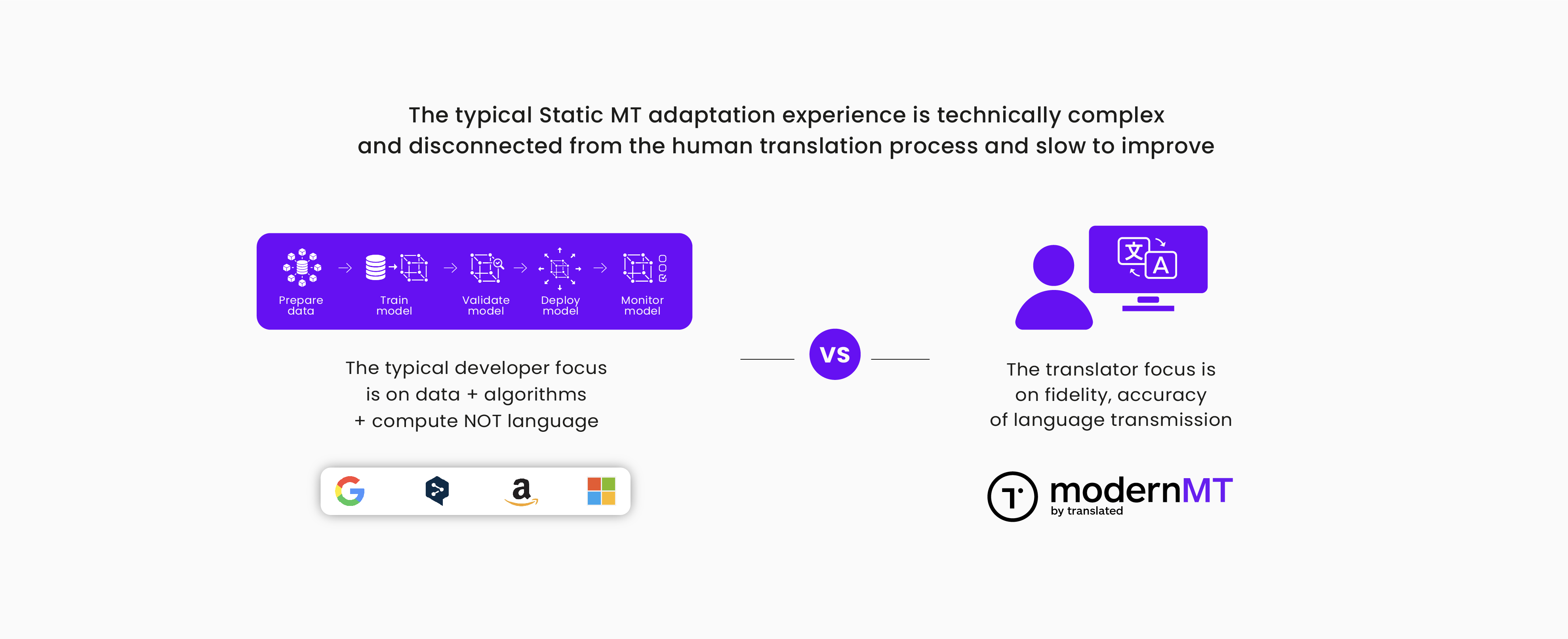

The diagram above shows the typical process for developing a static engine and putting it into production.

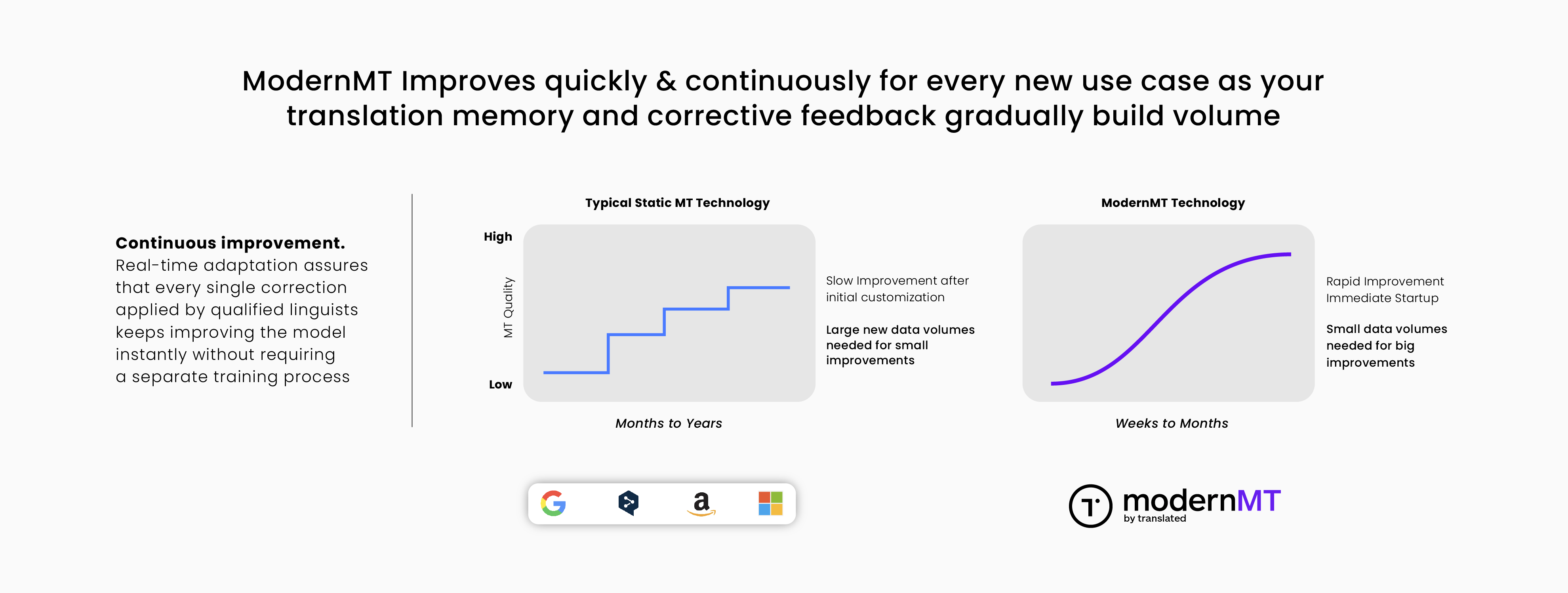

A key characteristic of these static engines is that they do not evolve quickly. Improvements require large new data sources that are not readily available, so generic static engines are typically updated no more than once a year.

On any given day, the major generic MT engine portals (Google, Microsoft, Baidu) allow hundreds of millions of people to translate material of interest. We have already reached the point where 99% or more of the translation done on the planet is done by computers, thanks to these generic engines.

However, the requirements for MT use and performance in professional or business environments are very specific and usually require that generic engines be modified and optimized for company- or project-specific terminology and linguistic style. Optimizing for corporate terminology and content is called customization or adaptation.

For example, if we consider the needs of IKEA, Pfizer, Airbnb, and Samsung, it is clear that they all have very different needs in terms of subject domain focus, style, and critical terminology and would be better served by enterprise-optimized MT than by a generic, one-size-fits-all MT solution.

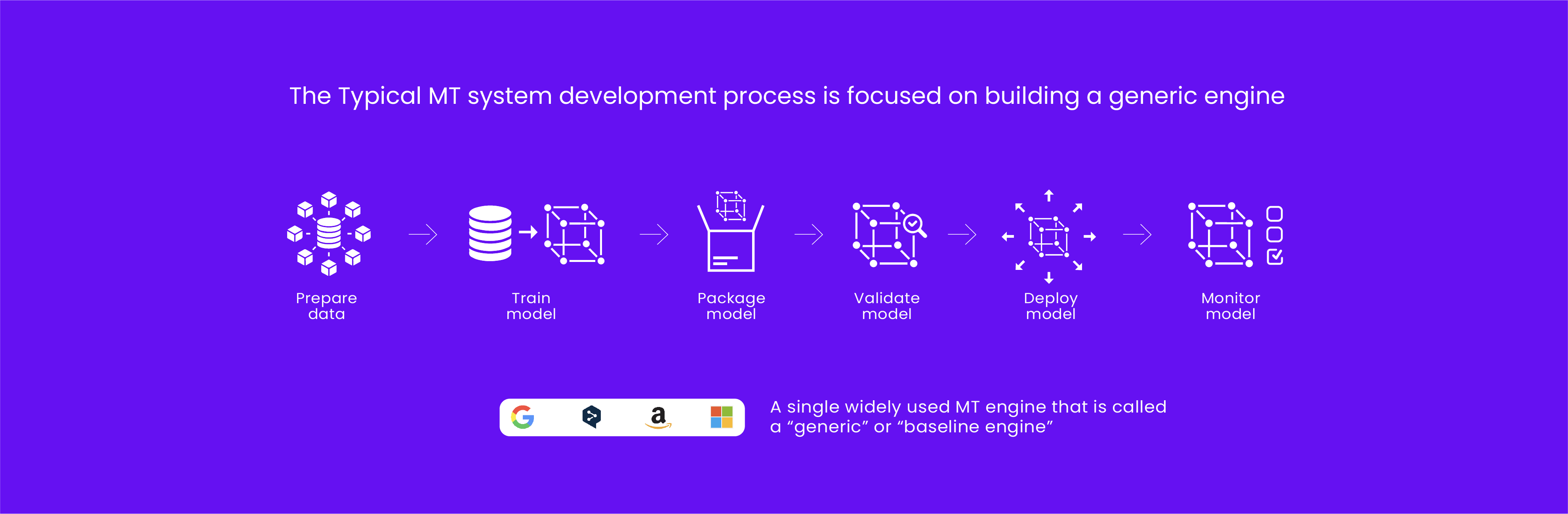

The typical MT customization process using static engines is described below. The customization effort and process is a scaled-down version of the generic engine development process. Typically, it requires the collection and incorporation of enterprise translation memory relevant to the use case into the generic model via a scaled-down "training process."

This effort results in limited or coarse optimization, and only if sufficient training data resources are available. The optimization is considered coarse because the training data available to perform it is typically minuscule compared to the base data used in the generic engine. There is little value in training an engine with limited data, as there would be no difference in performance from the generic baseline.

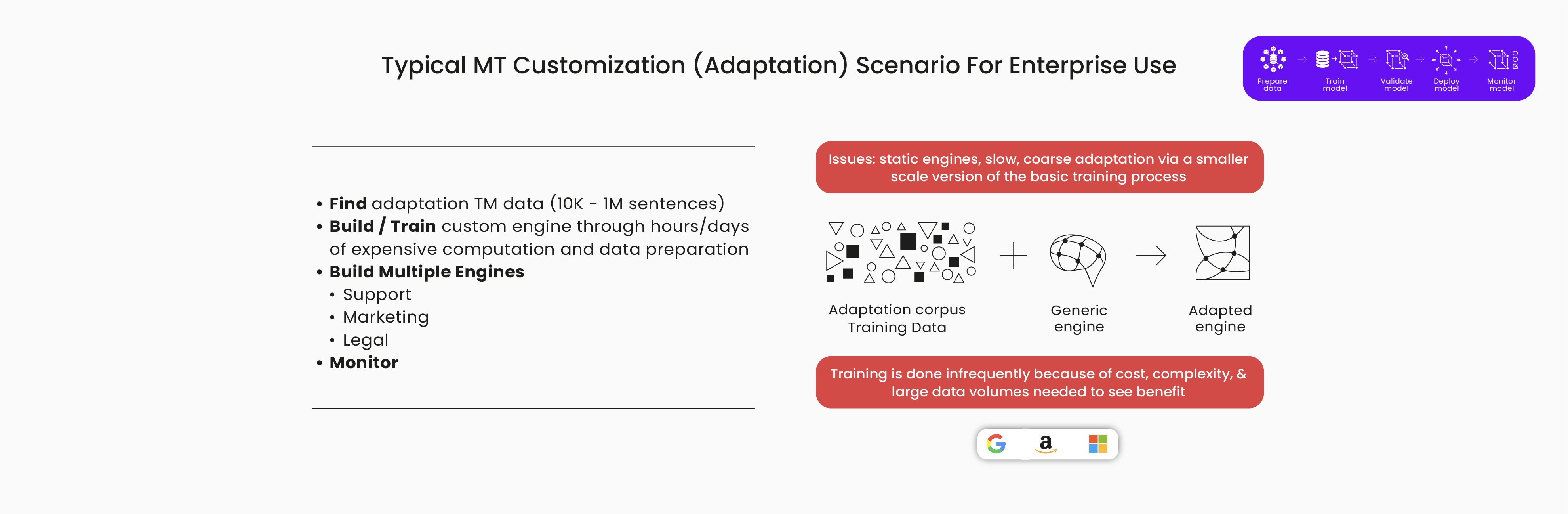

Thus, many attempts to use MT in professional settings face data scarcity problems, which reduce the potential impact of adaptation. To further complicate matters, it is usually necessary to build separate engines for each different use case, e.g., customer support, marketing, and legal would all be optimized separately.

Since many global enterprises have multiple product lines and businesses that cross multiple domains (TVs, semiconductors, PCs, home appliances), a large number of MT engines is often needed to cover global business needs, and all of them must be managed and maintained. This management burden is often not understood at the outset when localization teams embark on their MT journey. This complexity also creates a lot of room for error and misalignment, as the data behind each engine can easily get out of sync over time.
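To give a rough sense of how quickly the engine count can grow, here is a minimal back-of-the-envelope sketch. It assumes, purely for illustration, that one custom engine is built per combination of domain, use case, and language pair. The domains and use cases come from the examples above, while the language pairs are hypothetical.

```python
from itertools import product

# Domains and use cases taken from the examples in the text.
domains = ["TVs", "semiconductors", "PCs", "home appliances"]
use_cases = ["customer support", "marketing", "legal"]
# Hypothetical language pairs, chosen only for illustration.
language_pairs = ["en-de", "en-fr", "en-ja", "en-ko", "en-es"]

# Assumption: one separately trained engine per combination.
engines = list(product(domains, use_cases, language_pairs))
engine_count = len(engines)  # 4 domains * 3 use cases * 5 language pairs

print(f"Engines to manage and maintain: {engine_count}")
```

Under these assumptions, even a modest setup already implies dozens of engines, each with its own training data to keep current.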

Over time, many enterprise MT initiatives can be characterized by several problems that are common to users of these static MT systems. These problems are summarized below in order of frequency and importance:

- Ongoing scarcity of training data: Static models require a lot of data to drive improvements. There is little value in retraining a model until new or corrective data volumes reach critical levels.

- Tedious MTPE experience: Post-editors must repeatedly correct the same errors because these MT engines do not regularly improve, often leading to worker dissatisfaction.

- MT model management overhead and complexity: There are too many models to manage and maintain, which can lead to misalignment errors.

- Communication issues: These typically arise between the MT development team and the localization team members and translators, who have very different views of the overall process.

- Context insensitivity: Sentence- and document-level context is typically missing from these custom models.

The Adaptive MT Experience

The static MT approach makes sense for large ad-supported portals where the majority (99%+) of the millions of users will use the MT systems without attempting modification or customization.

In contrast, the adaptive MT approach makes more sense for enterprise users and professional translators, who almost always attempt to modify the behavior of the generic model to meet the specific and unique needs of a business use case.

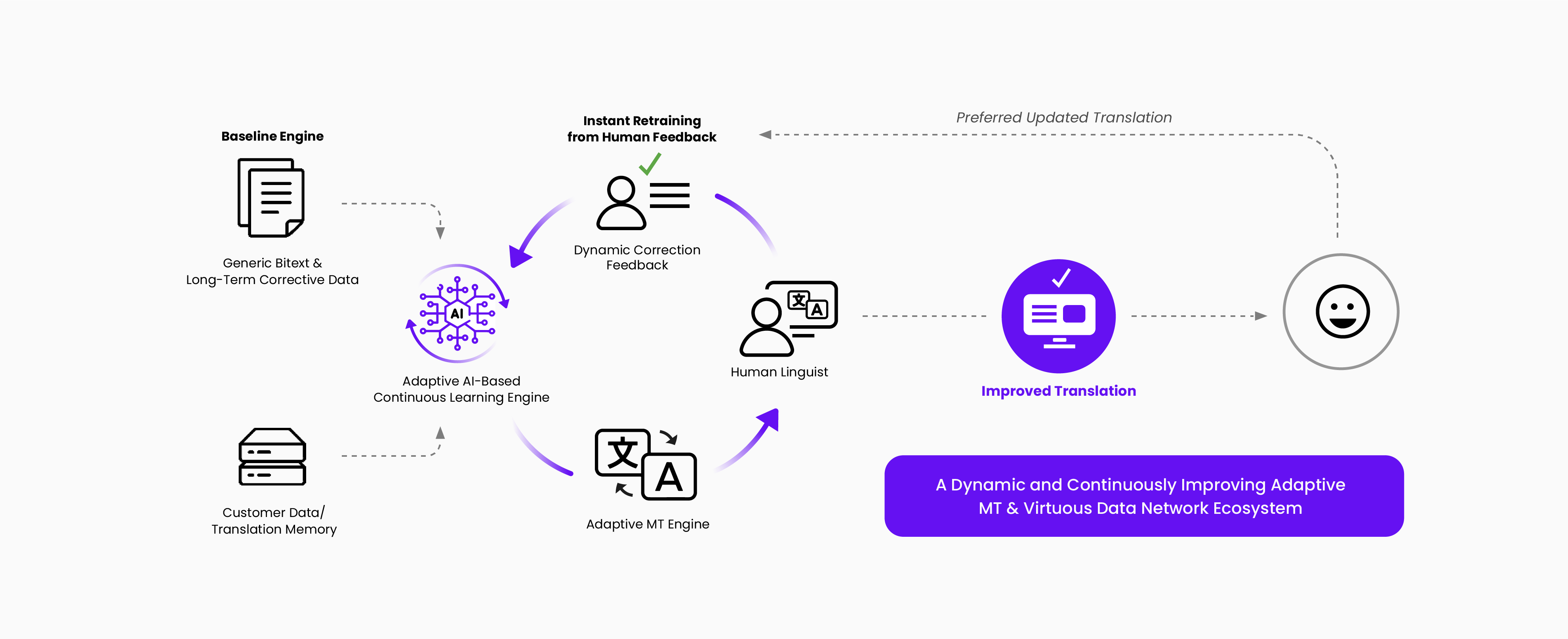

ModernMT is an adaptive MT technology solution designed from the ground up to enable and encourage immediate and continuous adaptation to changing business needs. It is designed to support and enhance the professional translator's work process and increase translation leverage and productivity. This is the fundamental difference between an adaptive MT solution like ModernMT and static generic MT systems.

“Simplicity is the ultimate sophistication” Leonardo da Vinci

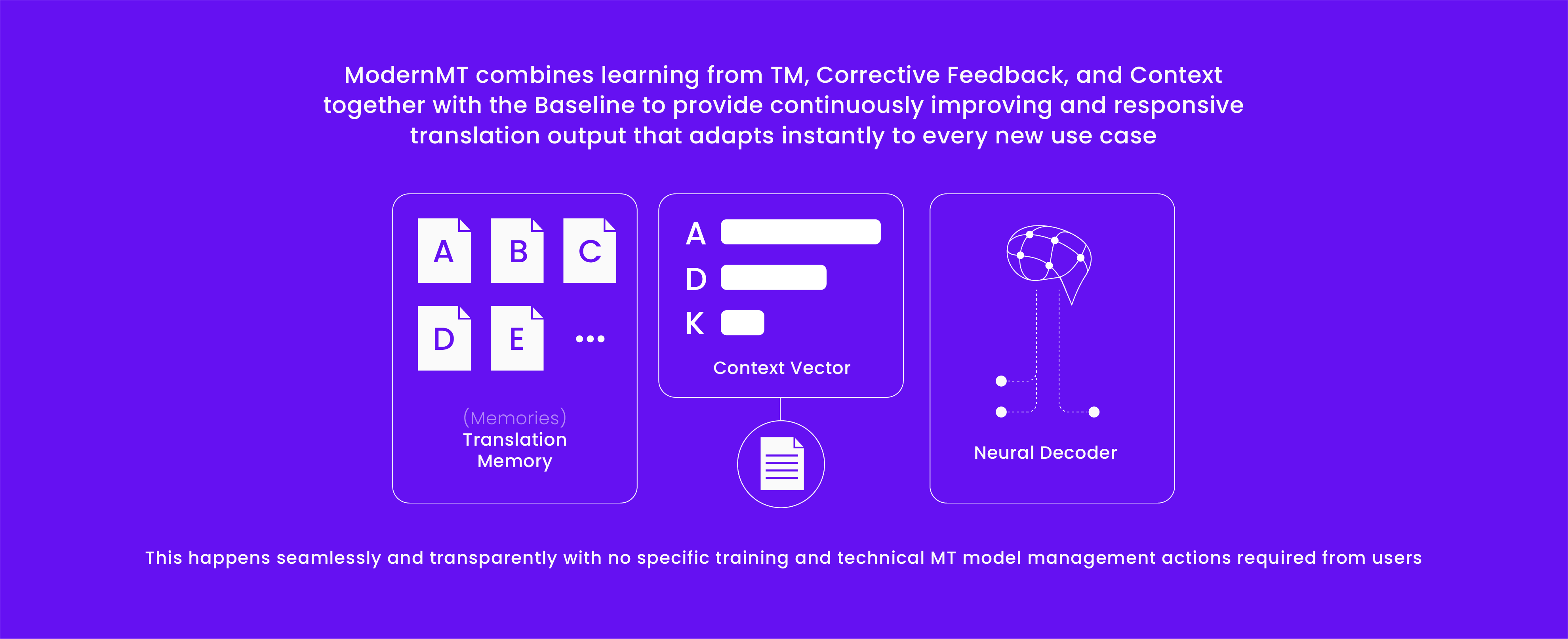

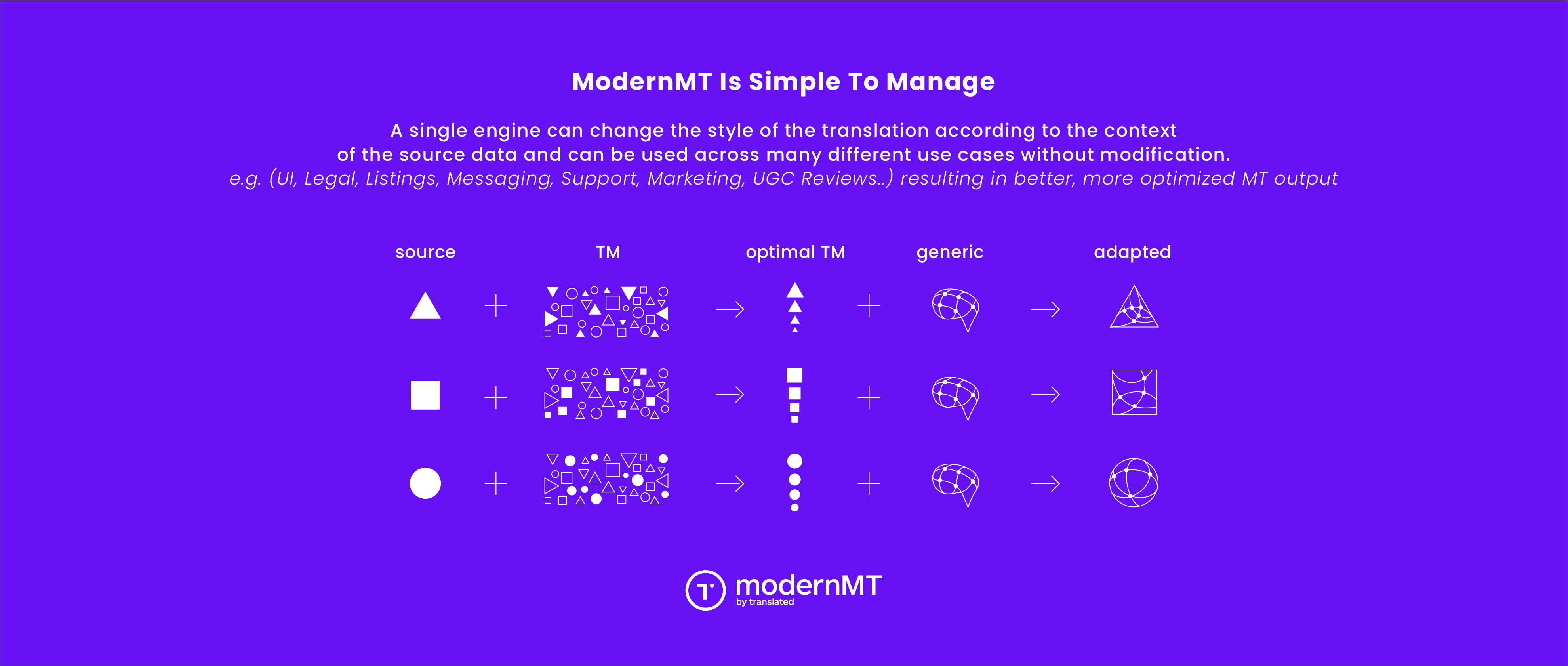

While the ModernMT adaptive MT engine also has a basic generic engine underlying its capabilities, it is designed to work instantly with any available translation memory resources and to learn instantly from corrective linguistic feedback.

This is done without any user intervention or action to "train" the system. The user simply points to any available TM and it is used if it is relevant to the translation task at hand. Thus, while many struggle to use MT in an environment where use case requirements are constantly changing, this adaptive MT system adjusts on the fly, drawing on memories, corrective feedback, and overall context gathered from both the memories and the overall document.
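The idea of using a TM only when it is relevant, and of making a correction usable immediately, can be illustrated with a toy sketch. This is not ModernMT's implementation or API. The `TranslationMemory` class, its method names, and the similarity threshold are all hypothetical, and simple fuzzy string matching from Python's standard library stands in for whatever relevance mechanism a real adaptive system uses.

```python
from difflib import SequenceMatcher


class TranslationMemory:
    """Toy in-memory TM (hypothetical; not a ModernMT API)."""

    def __init__(self):
        self.units = []  # list of (source, target) pairs

    def add(self, source, target):
        # A new or corrected segment is available to the very next
        # request; there is no separate retraining step.
        self.units.append((source, target))

    def relevant(self, sentence, threshold=0.6, top_k=3):
        """Return up to top_k (score, source, target) tuples whose
        source text resembles the sentence, best match first."""
        scored = []
        for source, target in self.units:
            score = SequenceMatcher(None, sentence.lower(), source.lower()).ratio()
            if score >= threshold:
                scored.append((score, source, target))
        scored.sort(key=lambda item: item[0], reverse=True)
        return scored[:top_k]


tm = TranslationMemory()
tm.add("Tighten the screw with the Allen key.", "Serrez la vis avec la clé Allen.")
tm.add("Our refund policy lasts 30 days.", "Notre politique de remboursement dure 30 jours.")

query = "Tighten the bolt with the Allen key."
before = tm.relevant(query)  # only the similar assembly sentence qualifies

# A linguist's correction is stored and picked up on the next lookup.
tm.add(query, "Serrez le boulon avec la clé Allen.")
after = tm.relevant(query)   # the corrected segment now ranks first
```

In this sketch the unrelated refund sentence never reaches the threshold, so it is ignored for the assembly text, while the correction becomes the top match as soon as it is added.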

As the use of MT grows in the enterprise, the benefits of an adaptive MT infrastructure continue to accrue, as the management and maintenance of the many production MT systems require nothing more than the organization of TM assets and the provision of continuous corrective feedback to drive continuous improvements in system performance.

Thus, content creators and linguistically informed users can be the primary drivers of the ongoing system evolution. Because the underlying continuous improvement process is always active in the background, there is no need for any technology process management by these users. Translation issues that may arise in widespread use can be quickly identified and corrected by linguists without the need for support from MT technology experts.

New use cases and large-scale deployments can be launched rapidly by focusing human translation effort on the most relevant and most frequently occurring content. Adaptive MT technology allows for evolutionary approaches that ensure continuous improvement.

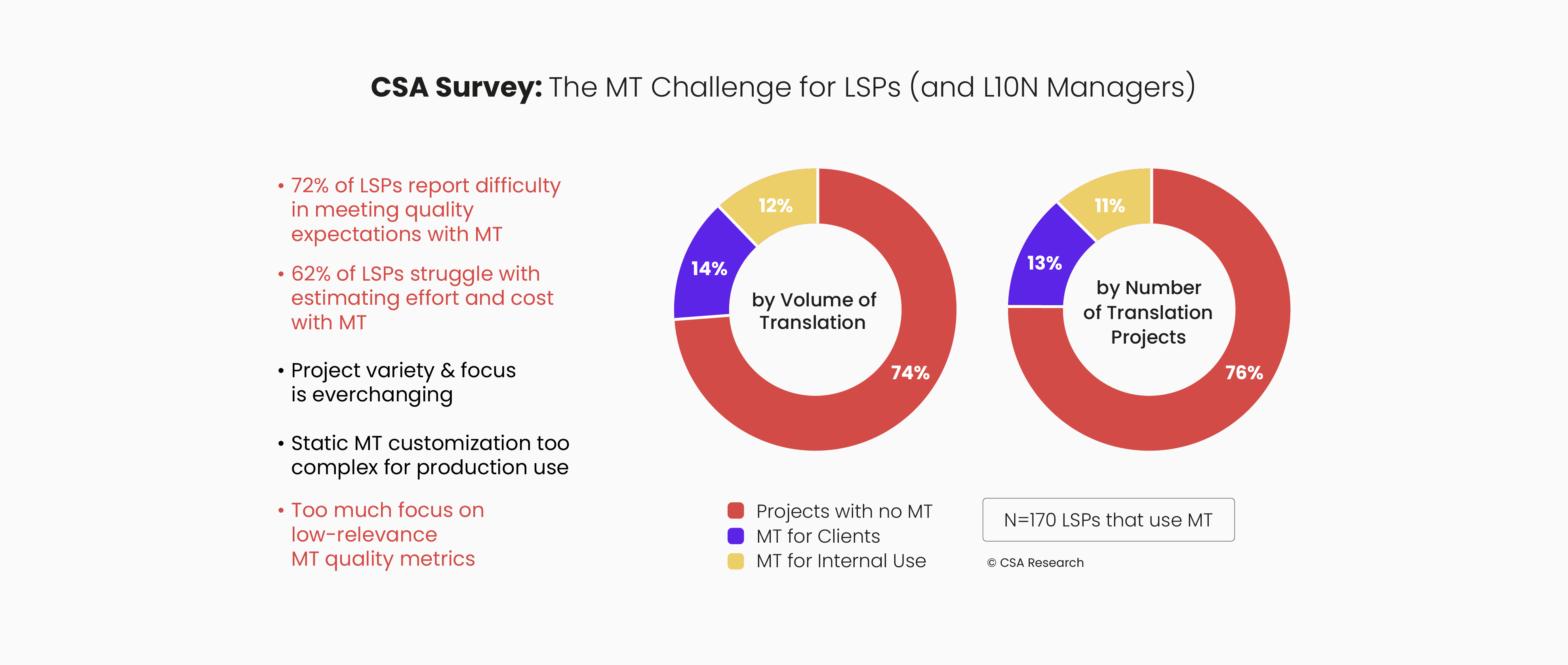

Independent market research points to some key factors that are often overlooked by those attempting to deploy MT in professional and enterprise environments. Surveys conducted by Common Sense Advisory and Nimdzi show that most LSPs and enterprises struggle to deploy MT in production for three key reasons:

- Inability to produce MT output at the required quality levels. This is most often due to a lack of the training data needed for meaningful improvement.

- Inability to properly estimate the effort and cost of deploying MT in production.

- The ever-changing needs and requirements of different projects, combined with static MT that cannot easily adapt to them, create a mismatch of skills, data, and competencies.

Given these difficulties, it is worth considering the key requirements for a production-ready MT system. Why do so many still fail with MT?

One reason for failure is that many LSPs and localization managers have used automated metrics to select the "best" MT system for their production needs without having any understanding of how MT engines improve and evolve. Automated MT quality metrics such as BLEU, edit distance, hLEPOR, and COMET are typically used for this purpose.

These scores are all useful for MT system developers to tune and improve MT systems, but globalization managers who use this approach to select the "best" system may overlook some rather obvious shortcomings of this approach to optimal MT selection.

Ideally, the "best" MT system would be determined by a team of competent translators who would run directly relevant content through the MT system after establishing a structured and repeatable evaluation process. This is slow, expensive, and difficult, even if only a small sample of 250 sentences is evaluated.

Thus, automated measurements (metrics) that attempt to score translation adequacy, fluency, precision, and recall must often be used. They attempt to do what is best done by competent bilingual humans. These scoring methodologies are always an approximation of what a competent human assessment would determine, and can often be incorrect or misleading, especially with static test sets.
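As one concrete illustration of how such a metric works, the following minimal sketch computes a word-level edit distance between an MT output and a single reference translation, then normalizes it by the reference length. The function names and example sentences are illustrative only. Production metrics such as TER add further steps (for example, handling phrase shifts and tokenization), so this is a simplified stand-in rather than any tool's exact formula.

```python
def edit_distance(hyp_tokens, ref_tokens):
    """Levenshtein distance between two token lists."""
    previous = list(range(len(ref_tokens) + 1))
    for i, hyp_tok in enumerate(hyp_tokens, start=1):
        current = [i]
        for j, ref_tok in enumerate(ref_tokens, start=1):
            cost = 0 if hyp_tok == ref_tok else 1
            current.append(min(
                previous[j] + 1,         # delete a hypothesis token
                current[j - 1] + 1,      # insert a reference token
                previous[j - 1] + cost,  # substitute or match
            ))
        previous = current
    return previous[-1]


def normalized_edit_rate(hypothesis, reference):
    """Edits needed to turn the hypothesis into the reference,
    divided by the reference length in words (lower is better)."""
    hyp, ref = hypothesis.split(), reference.split()
    if not ref:
        return 0.0 if not hyp else 1.0
    return edit_distance(hyp, ref) / len(ref)


mt_output = "the cat sat on mat"
reference = "the cat sat on the mat"
rate = normalized_edit_rate(mt_output, reference)  # one missing word out of six
```

The score depends entirely on the chosen reference: an equally valid translation that uses different wording would be penalized, which is one reason such scores only approximate a competent human assessment.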

This approach of ranking different MT systems by scores based on opaque and possibly irrelevant reference test sets has several problems. These problems include:

- These scores do not represent production performance.

- These scores are typically obtained on static MT systems and do not capture a system's ability to improve.

- The results are an old snapshot of a constantly changing scene. If you change the angle or focus, the results change.

- Small differences in scores are often meaningless, and most users would be hard-pressed to explain what these small numerical differences might mean.

- The score is an approximate measure of system performance at a historical point in time and is generally not a reliable predictor of future performance.

- These scores are unable to capture the dynamic evolution typical of an adaptive MT system.

- Generic, static systems often score higher on these rankings initially, but this does not reflect the fact that they are much more difficult to tune and adapt to unique, company-specific requirements.

As a result, the selection of MT systems for production use based on these score-based rankings can often be suboptimal or simply wrong. The use of automated metrics to select the "best" MT system is done to manage what is essentially a black-box technology that few understand. NMT system performance is mysterious and often inscrutable. Scores, as misleading as they may be, make it easier to justify purchase decisions and document buyer due diligence.

But the failure of so many LSPs with MT technology suggests that this approach may not be the best way forward to achieve production-ready and production-grade MT technology. So what criteria are more relevant in the context of identifying production-grade MT technology? The following criteria are much more likely to lead to technology choices that make long-term sense:

- The speed with which an MT system can be tuned and adapted to unique corporate content. Systems that require complex training efforts by technology specialists will slow the globalization team’s responsiveness.

- The ease with which the system can be adapted to unique corporate needs. The need to have expensive consulting resources or dedicated MT technology staff on hand and ready to go greatly reduces the agility and responsiveness of the globalization team.

- An automated and robust MT model improvement process as corrective feedback and improved data resources are brought to bear.

- The complexity of MT system management. This increases sharply when multiple vendors are used, as they may have different maintenance and optimization procedures, which suggests that it is better to focus on one or two partners and build expertise through deep engagement.

- The ability of a system to enable startup work even if little or no data is available.

- A straightforward process to correct any problematic or egregious translation errors. Many large static systems need large volumes of correction data to override such errors.

- The availability of expert resources to manage specialized enterprise use cases and trained human resources (linguists) to help prime and prepare MT systems for large-scale deployment.

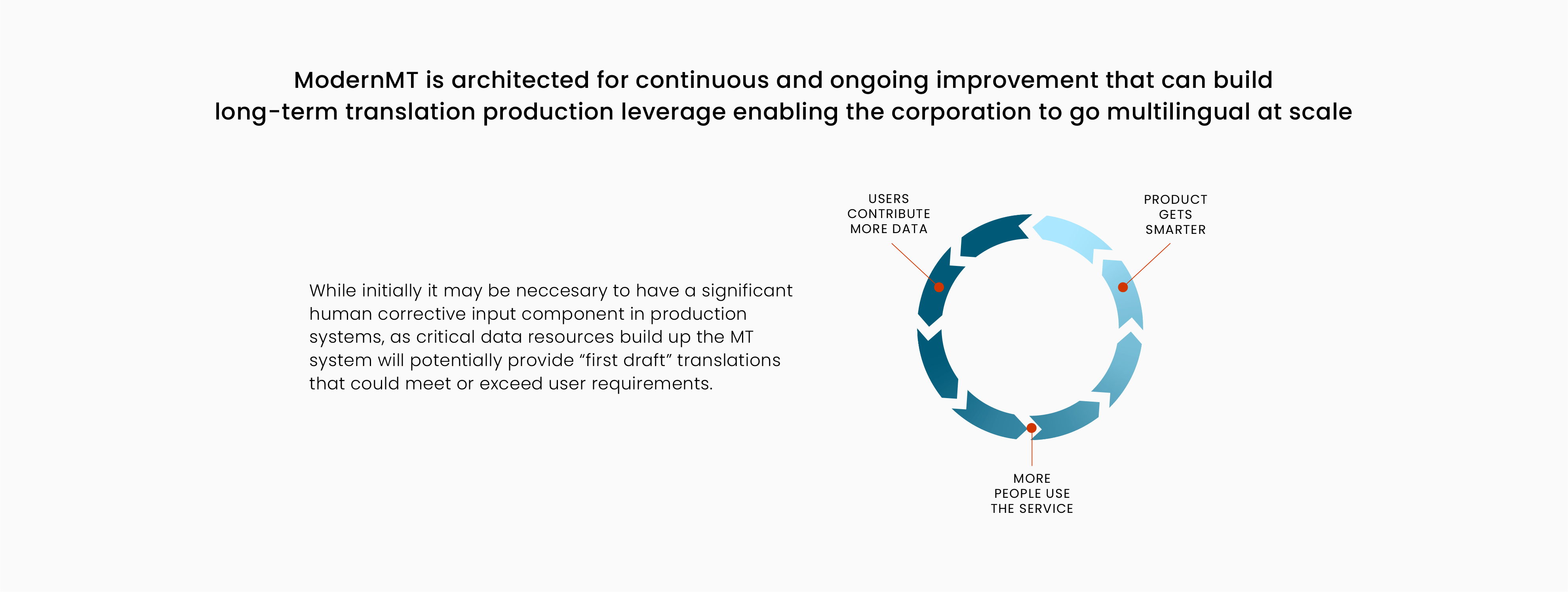

It is now common knowledge that machine learning-based AI systems are only as good as the data they use. One of the keys to long-term success with MT is to build a virtuous data collection system that refines MT performance and ensures continuous improvement. The existence of such a system would encourage more widespread adoption and enable the enterprise to become multilingual at scale. This would allow the enterprise to remove language as a barrier to global business success.

It is easy to assume that all adaptive MT systems employ the same technological strategy. This is not the case: a closer look at the very few other adaptive MT solutions on the market makes it clear that real-time, in-context adaptation can be architected in different ways. As more buyers understand that the responsiveness of the MT system matters more than a static COMET score on a random test set, evaluation strategies will change. It will be more useful to see which systems change most easily with the least amount of effort.

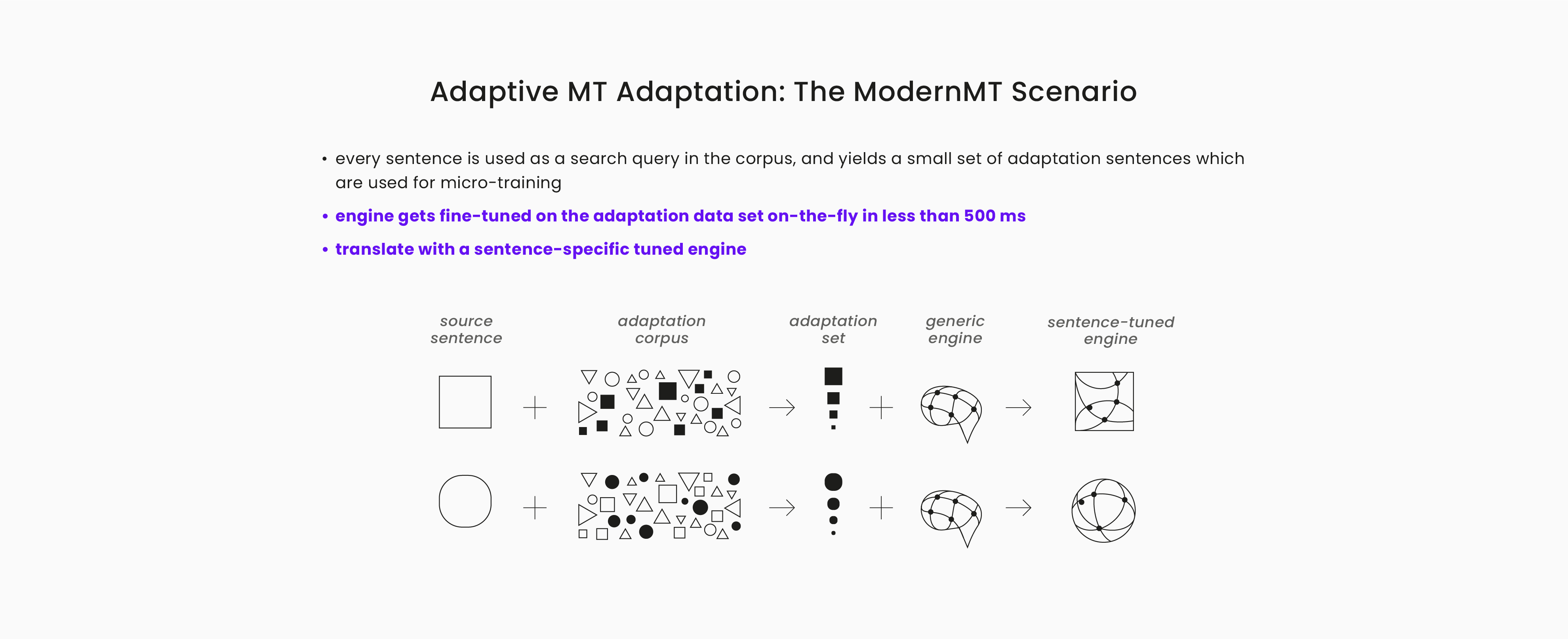

The ModernMT approach to adaptation is to bring the encoding and decoding phases of model deployment much closer together, allowing dynamic and active human-in-the-loop corrective feedback that is not so different from the in-context corrections and prompt modifications we are seeing with large language models.

In the future, as Large Language Models (LLMs) become more cost-effective, scalable, secure, and controllable, it is possible that they could be used to further enhance SOTA adaptive MT models by improving both core translation quality and output fluency. They might do so as stand-alone solutions or, more likely, as hybrid models that work with purpose-built MT models that are yet to come.

While LLMs have shown they can perform well in some high-resource languages, the initial evaluations also show that they perform much worse in lower-resource languages. LLMs are not optimized for the translation task, so this is expected. Since LLMs depend on finding large caches of data in each language, this is not a problem that will be solved easily or quickly. The data volumes they need to improve are substantial and often not easily found.

In contrast, ModernMT just announced support for 200 languages that can all immediately benefit from the continuous improvement infrastructure that underlies the technology, and begin the steady quality improvement process that is described in this article.

However, it is increasingly clear that systems that can improve performance in real time and respond quickly and efficiently to informed and expert human feedback are very likely to be the preferred approach to solving the challenge of automated language translation at scale.

ModernMT is a product by Translated.